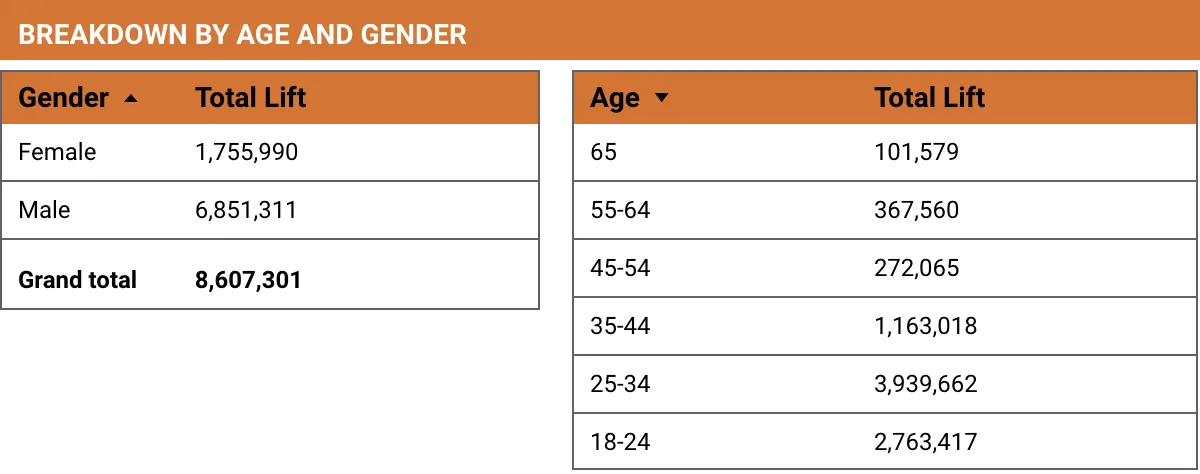

Note that the difference between the numbers of men and women impacted by the campaigns says less about men’s and women’s attitudes toward COVID prevention behaviors and the vaccines than about who we reached through the campaign efforts. In countries like Mali, Somalia, and Pakistan, 80% of our reach was to men because there are more men on the Facebook platform in those countries.

While social media as a social and behavioral change communications tool is still in its experimental stages, we learned a lot about what worked and what didn’t.

Creative Learnings

CARE wrote extensively about some of the creative learnings and successes in our articles “COVID-19 and Social Media: Using Facebook to Drive Social and Behavioral Change in 19 Countries” and “COVID-19 and Meta: Social and Behavioral Change Communication Learnings from 20 Countries”. A few key highlights include:

- The importance of creating for mobile. All creative should be optimized for mobile phones.

- Prioritize graphics or video over heavy text, particularly for areas with low literacy.

- Localize content. Content in regional dialects typically outperformed other content.

- Optimize video for mobile as well. Format videos for mobile phones, include subtitles, and have the most important messages within the first three seconds and a great thumbnail image that will show in-feed.

- Limit the use of technical language. Posts that utilized a lot of medical or technical terminology did not perform well.

- The need for clear, singular messaging. Images or campaigns that included too many messages typically did not see strong Brand Lift Study results.

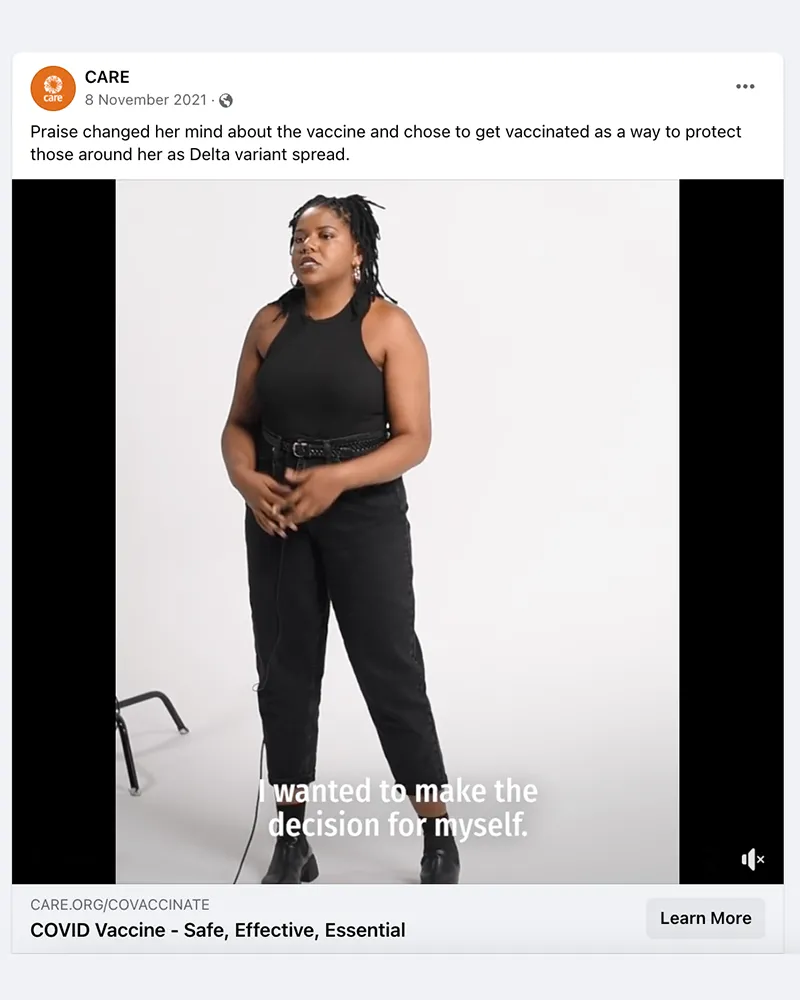

- Creative that was authentic and showcased personal stories, testimonials, or ideas about freedom and liberty performed well globally.

- Creative did not have to be “perfectly constructed” to perform well. Some of the best performing videos were shot on a mobile phone.

- Campaigns that had multiple formats (e.g., static, GIF or short video, long video) seemed to outperform campaigns that used only a single format.

Brand Lift Study (BLS) Tool Learnings

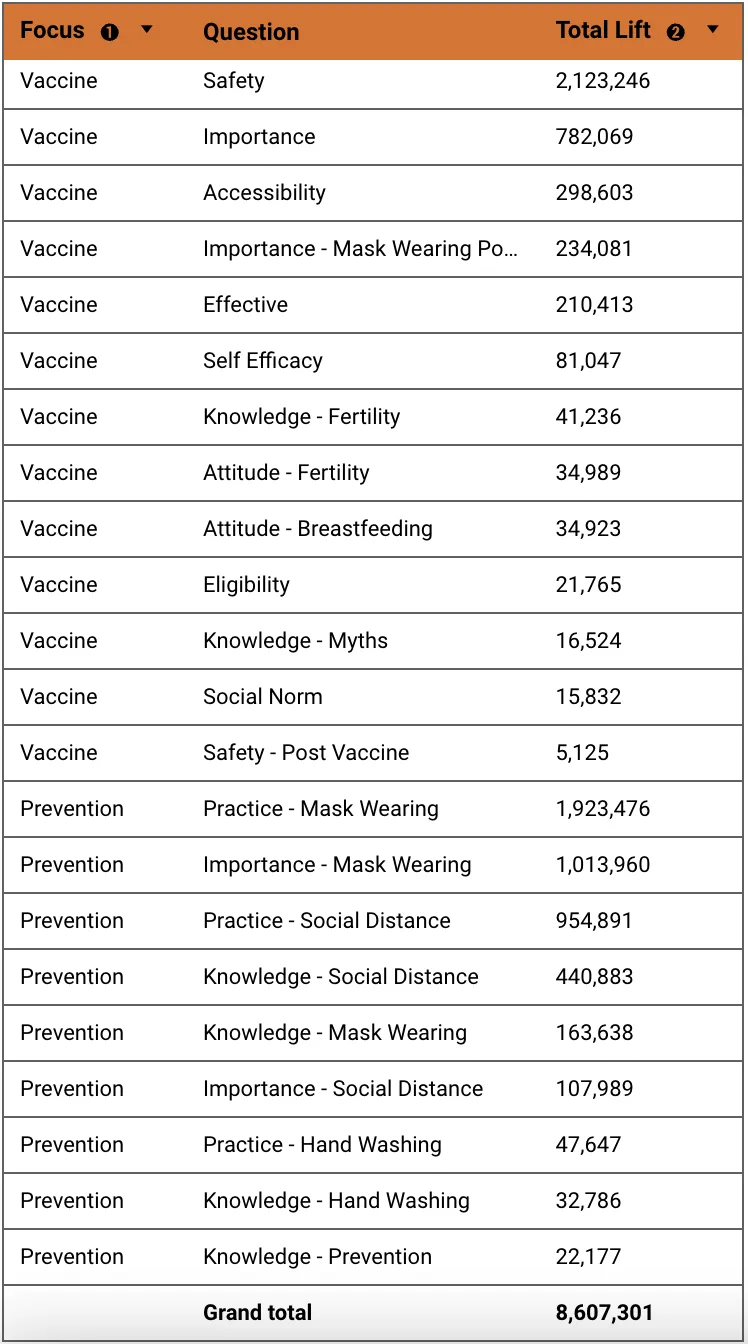

For this initiative, one of the key measurement tools used was Meta’s Brand Lift Study (BLS) tool. While this tool was informative, it posed several measurement challenges, including:

- BLS required a large reach to ensure results were statistically significant. This scale proved challenging for CARE Country Offices that did not have a population of one million on the Facebook platform, much less one million members of their vaccine-hesitant population. The scale required also proved challenging when there was a desire to personalize messaging or to target a specific audience.

- BLS only worked well when a campaign was focused on a single message. Because survey recipients see only one question, campaigns often did not show positive BLS results when the campaign incorporated multiple themes. For example, if a campaign had one ad that focused on mask wearing and another ad that focused on social distancing, there was often little to no lift on one or both questions. This was particularly challenging for campaign designers, as there was often a desire (and need) to talk about multiple themes.

- The campaign objective selected within Facebook’s campaign management tool can change BLS and overall campaign results significantly. For example, campaigns that used an “Engagement” objective were memorable, seeing an average ad recall lift of 12 percentage points, while campaigns with a “Reach” objective saw an average ad recall lift of 6 percentage points. On average, campaigns with an “Engagement” objective also saw higher lifts on attitude and behavior questions. Campaigns with a “Traffic” objective regularly saw click-through rates (CTR) above one percent (1%), but consistently struggled to reach the scale needed for a BLS.
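To illustrate why scale matters for a lift measurement, here is a minimal sketch of how a lift in percentage points can be computed from an exposed group and a control group, with a standard two-proportion z-test for significance. This is not Meta’s actual BLS methodology, which is not described in this document. The function name and all survey counts below are hypothetical and chosen only for illustration.

```python
from math import sqrt, erfc


def lift_and_p_value(yes_exposed, n_exposed, yes_control, n_control):
    """Return (lift in percentage points, two-sided p-value).

    Assumption: a pooled two-proportion z-test, used here only as a
    textbook stand-in for whatever test the real tool applies.
    """
    p_exposed = yes_exposed / n_exposed
    p_control = yes_control / n_control
    lift_pp = (p_exposed - p_control) * 100

    # Pooled standard error under the null hypothesis of no difference
    pooled = (yes_exposed + yes_control) / (n_exposed + n_control)
    se = sqrt(pooled * (1 - pooled) * (1 / n_exposed + 1 / n_control))

    z = (p_exposed - p_control) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided, normal approximation
    return lift_pp, p_value


# Hypothetical data: the same 6-point lift at two different sample sizes
small = lift_and_p_value(36, 100, 30, 100)      # 100 respondents per group
large = lift_and_p_value(720, 2000, 600, 2000)  # 2,000 respondents per group

print(f"small sample: lift={small[0]:.1f} pp, p={small[1]:.3f}")
print(f"large sample: lift={large[0]:.1f} pp, p={large[1]:.5f}")
```

With these made-up counts, the same 6-point lift is not statistically significant at 100 respondents per group but is at 2,000 per group. This is consistent with the challenge small Country Offices faced in reaching the scale a BLS needs.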

Much more testing is needed to understand what levers might drive impact in knowledge, attitude, and behavior changes. Some themes that CARE started to see include:

- Campaign Objective. Leveraging Meta’s “Engagement” objective seemed to drive higher BLS results, but more testing is needed to determine the value of the objective vs. other campaign objective options.

- Greatest Impact. For online SBCC campaigns, should we focus on one thing at a time? Should we choose only knowledge, only attitude, or only behavior change? Or should we pursue change in all three, one campaign and one objective at a time? Could we partner with other organizations and coordinate so that each of us handles a unique piece of the change puzzle? For example, might CARE focus on attitudinal shifts while partnering with an organization like FHI that has its own vaccine booking system and follows up with stronger behavioral change messaging to book a vaccine appointment?

- Trusted Messengers. How do trusted messengers change over time or at different stages? Does the impact of healthcare workers depend on the health topic? For example, how might the results doctors achieve as trusted messengers vary when offering information on malaria prevention vs. sexual and reproductive health information? In the context of epidemics, might healthcare workers have a greater impact at the onset? Does their influence start to wane if the epidemic is protracted? Will using “everyday people sharing personal stories” always drive more knowledge, attitude, and behavior change?

- Comment Moderation. Continue testing comments turned on vs. off for controversial posts, as well as moderated posts (someone curating the conversation) vs. unmoderated posts (allowing all comments regardless of sentiment or validity). CARE’s analysis explored whether high share rates or high comment rates were a factor in stronger BLS results. Campaigns with high engagement rates tended to drive higher lifts in BLS results, particularly when there was a high comment rate.

Participating in this project has CARE wondering how social media fits into broader SBCC program plans going forward.

With social media, we can do a few things that aren’t possible with other methodologies, such as:

- Target the message reach to very specific populations (e.g., girls 18-25 living in Dhaka who like cricket)

- Precisely survey individuals who saw our message as well as a control group who didn’t, and compare the results relating to shifts in knowledge, attitude, and behavior (large-scale treatment vs. control research)

- Quickly measure performance metrics like views and interactions (and click throughs)

- Iterate and optimize creative in real time

The methodology of Meta’s BLS tool was incredibly helpful. A similar study of our offline methodologies would be prohibitively expensive. That said, there are several things other methodologies afford that social media does not, such as:

- Before and after studies

- Measurement of actual (not self-reported) offline behavior, since BLS captures only self-reported behavior

- Reach people who are not on social media (sometimes a significant part of the population we’re working with, but not always)

- Easy ways to ask questions, get quick answers, and ask follow-up questions for clarity or additional questions based on responses

- Face-to-face community dialogues around the message

This experience has CARE thinking about ways our social media practice could continue to support programmatic outcomes. Could social media work drive change in attitudes towards maternal health, gender equality, or climate?